TL;DR

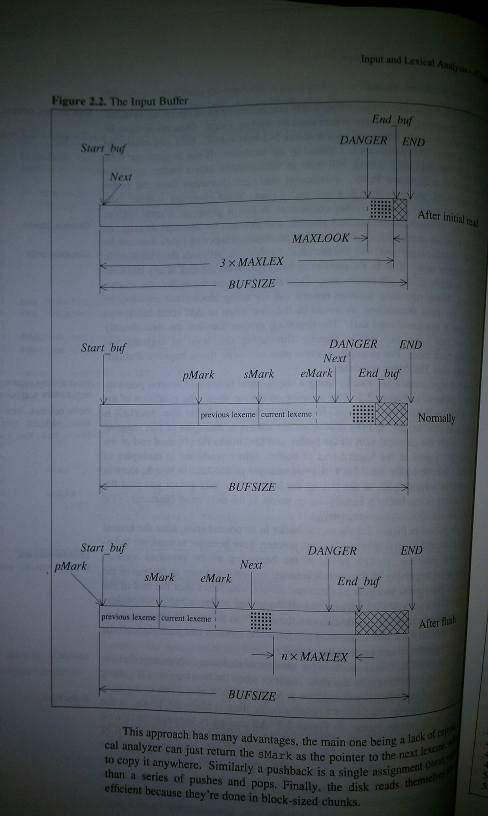

I am a Python guy. I write Python at work, I write Python for fun, and I've even dabbled with writing Python outside in the fresh air. Someday I hope I can plug a keyboard into a Kindle and actually code outside comfortably. I've also been reading a textbook called Compiler Design in C lately. I've just gotten to the part where the author describes a relatively complex way of reading files with a minimum of copying.

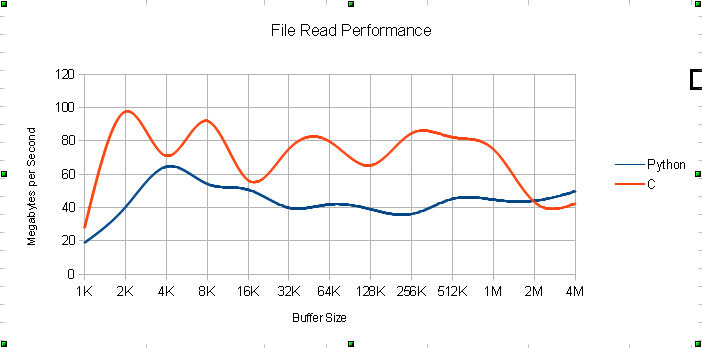


(Coming from a background that rarely cares about performance being better than "good enough," it's different to be reading about designing for high performance in the first place.)

WHY??

In the text the author claims that "MS-DOS read and write times improve dramatically when you read 32k bytes at a time." I had to test this, and I figured I could pit C vs. Python in a very shallow, distorted way at the same time.

The Setup

I originally did this test reading the same small file chunk over and over again, but I realized that this probably takes advantage of OS caching and becomes a test of this caching rather than of the speed of the two languages.

So I set up an 8GB file, filled with the string "0123456789ABCDEF" over and over and over. Then, for each buffer size, the two languages do 2000 sequential reads of the file.

Pitfalls

Sequential and random reads are known to produce different characteristics. It would probably have produced better results if I had done a series of random reads instead of sequential ones.

2,000 iterations is not really enough iterations to establish behavior solidly, but I didn't actually think of doing random reads until just now, and there was no way I was going to set up a 40GB file so that I could do 10,000 reads of 4MB each.

I didn't do a whole lot of research into the buffering modes that Python offers for doing file reads. Some of those would make a difference. I have a feeling that normal file reads are internally buffered and copied at least once. That's a huge advantage for C, because read() is purported to allow the OS to copy straight from disk into your buffer if the buffer is the right size. At least it was allowed in 1990 when this book came out.

The Results

So vanilla Python reads are half as fast as C's read(). Big whoop. I was expecting much worse, perhaps 5-7x slower. At least on Windows 7, these limited benchmarks indicate an optimal C buffer size somewhere between and including 32K and 1M. I'm convinced that the high read speeds below 32K for C would disappear entirely if I were doing random reads.

For Python, I'm not sure what to recommend. The highest speeds were with 4K, but that just seems too low to make sense. More research required.

The Stuff

The Excel Spreadsheet
The Code
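
For anyone who wants to try something similar, here is a minimal Python sketch of the setup described above. It is not the benchmark code from this post: the function names, file name, file size, and iteration count are all assumptions made for illustration. It also uses a small 8 MiB temporary file, so the OS cache will dominate the timings (the same trap mentioned under The Setup); a real run would need a file much larger than RAM. Passing `buffering=0` to `open()` asks Python for an unbuffered raw file object, which is one way to probe the buffering question raised under Pitfalls.

```python
import os
import tempfile
import time

PATTERN = b"0123456789ABCDEF"  # the 16-byte filler string described in the post


def make_test_file(path, size_bytes):
    """Write size_bytes of the repeating pattern to path (hypothetical helper)."""
    chunk = PATTERN * 4096  # 64 KiB per write, a multiple of the pattern length
    with open(path, "wb") as f:
        remaining = size_bytes
        while remaining > 0:
            n = min(remaining, len(chunk))
            f.write(chunk[:n])
            remaining -= n


def time_sequential_reads(path, buf_size, iterations, buffering=-1):
    """Do up to `iterations` sequential reads of buf_size bytes.

    buffering=-1 is Python's default buffered mode; buffering=0 is unbuffered.
    Returns (elapsed_seconds, total_bytes_read).
    """
    total = 0
    with open(path, "rb", buffering=buffering) as f:
        start = time.perf_counter()
        for _ in range(iterations):
            data = f.read(buf_size)
            if not data:  # reached end of file before finishing
                break
            total += len(data)
        elapsed = time.perf_counter() - start
    return elapsed, total


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "testfile.bin")  # assumed name
        make_test_file(path, 8 * 1024 * 1024)  # 8 MiB stand-in for the 8GB file
        for buf_size in (512, 4096, 32 * 1024):
            for mode, label in ((-1, "buffered"), (0, "unbuffered")):
                secs, nbytes = time_sequential_reads(path, buf_size, 200, mode)
                print(f"{buf_size:>6} bytes, {label:>10}: "
                      f"{nbytes} bytes in {secs:.6f} s")
```

Comparing the buffered and unbuffered rows for each buffer size gives a rough feel for how much Python's own buffering layer costs, though on a cached file this small the numbers say more about memory copies than about the disk.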

What can I say… go C! XD

That's an interesting test you did there. Thanks for sharing.
My little brother once made a program that wrote the current date and time to a log. At least a quarter of my hard disk is used by that useless text.

Iasper: Truncate that log. =/

Also, screw Python. Just one more reason to hate it. >:(
@lasper That reminds me of the time I made a program that generated empty text files on my high school computers. Ended up making gigs worth of empty text files. I showed it to my IT teacher cos I thought it was cool, but he thought I was making a virus and was shocked at me .___. At least I didn't get into too much trouble

I did that too.

Got extra credit.
Didn't help my overall grade though.
What does FSX have against Python? XD

The lack of curly braces, I think. Or at least that's what he was saying while drunk.

The lack of a clear structure is more like it.

Of course, depending on which C/C++ functions you use for the IO, you will get different results. Inline assembly still beats the DOS calls hands down (if you know how to negotiate with the OS for direct device access, which is far easier in a Linux-based system than in Windows).
Good job on those stats though. It's more than I'd bother doing, TBH. :3